¶ Gromacs

We're using a GROMACS benchmarking dataset and the NVIDIA GROMACS image for GPU support.

[https://catalog.ngc.nvidia.com/orgs/hpc/containers/gromacs]

Pull the images

mkdir -p ~/.singularity

cd ~/.singularity

# CPU-only image

singularity pull docker://gromacs/gromacs:2022.2

# GPU-enabled image

singularity pull docker://nvcr.io/hpc/gromacs:2022.3

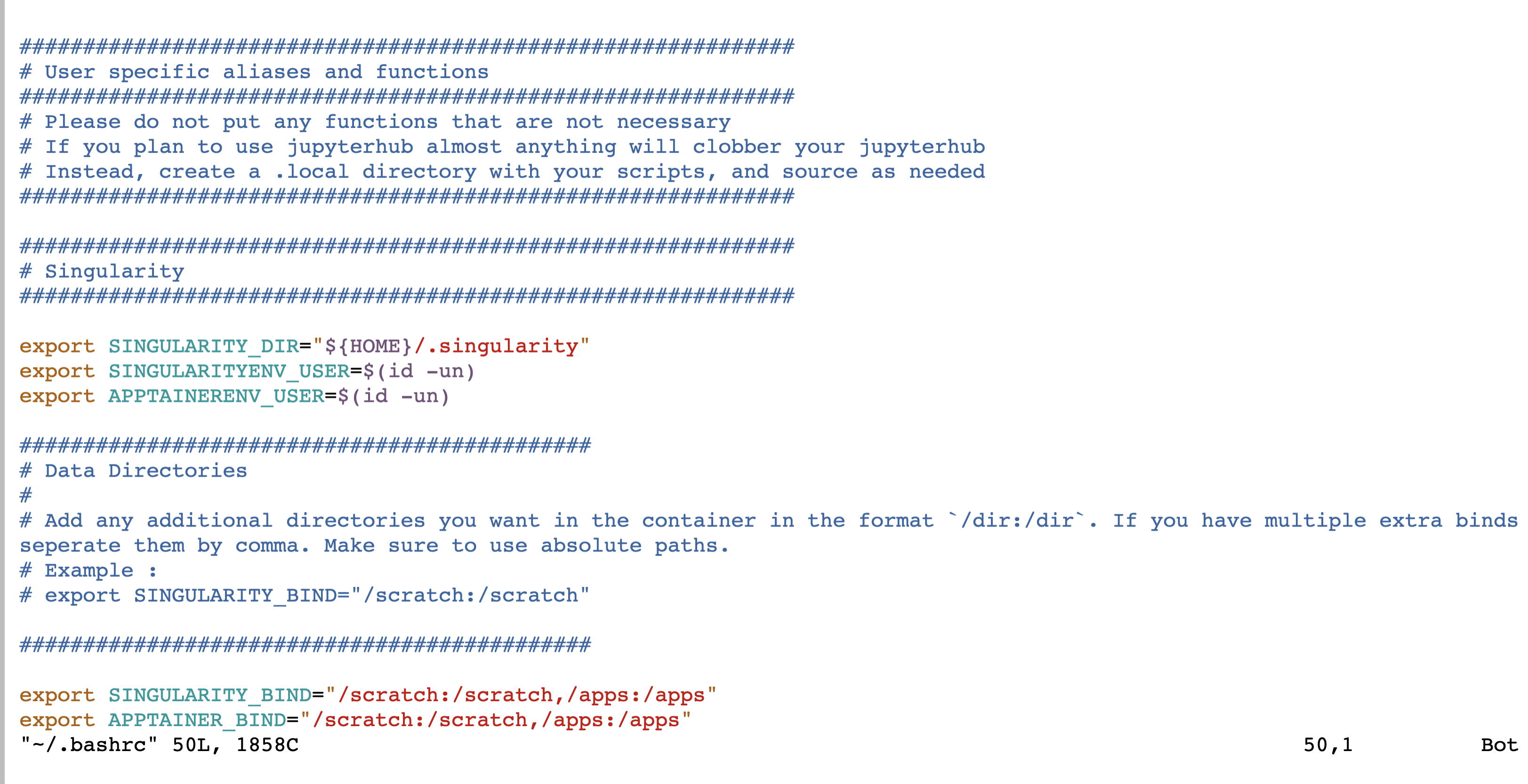
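If you rerun the setup often, the pull step can be skipped when the image is already on disk. The sketch below assumes Singularity's default naming for images pulled from a Docker registry (`<name>_<tag>.sif`). The function names `sif_name_for` and `pull_if_missing` are made up for this example and are not part of Singularity.

```shell
#!/usr/bin/env bash

# Derive the default .sif file name for a docker:// URI,
# e.g. docker://nvcr.io/hpc/gromacs:2022.3 -> gromacs_2022.3.sif
# (assumes the default <name>_<tag>.sif naming).
sif_name_for() {
  local uri="$1"
  local ref="${uri#docker://}"   # strip the scheme
  local last="${ref##*/}"        # last path component, e.g. gromacs:2022.3
  local name="${last%%:*}"       # image name without the tag
  local tag="latest"             # tag used when none is given
  case "$last" in
    *:*) tag="${last#*:}" ;;
  esac
  printf '%s_%s.sif\n' "$name" "$tag"
}

# Pull an image into a directory only if the .sif is not already there.
pull_if_missing() {
  local uri="$1"
  local dir="${2:-${HOME}/.singularity}"
  local sif
  sif="${dir}/$(sif_name_for "$uri")"
  mkdir -p "$dir"
  if [ -f "$sif" ]; then
    echo "already present: $sif"
  else
    singularity pull "$sif" "$uri"
  fi
}

# Example:
# pull_if_missing docker://nvcr.io/hpc/gromacs:2022.3
```

Passing the output file explicitly to `singularity pull` keeps the image in `~/.singularity` no matter which directory you run the helper from.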

¶ Optional - Add Singularity to ~/.bashrc

It's recommended to add a few variables to your ~/.bashrc.

export SINGULARITY_DIR="${HOME}/.singularity"

export SINGULARITYENV_USER=$(id -un)

export APPTAINERENV_USER=$(id -un)

#############################################

# Data Directories

#

# Add any additional directories you want in the container in the format `/dir:/dir`. If you have multiple extra binds, separate them with commas. Make sure to use absolute paths.

# Example :

# export SINGULARITY_BIND="/scratch:/scratch"

#############################################

export EXTRA_BINDS=""

export SINGULARITY_BIND="/scratch:/scratch,/apps:/apps"

export APPTAINER_BIND="/scratch:/scratch,/apps:/apps"
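If your systems don't all have the same directories, you can build the bind list from the ones that actually exist. This is a minimal sketch; `build_binds` is a hypothetical helper name and the directories in the example are placeholders for your own.

```shell
#!/usr/bin/env bash

# Build a comma-separated bind list (/dir:/dir) from the directories that
# exist on this host. Relative paths are skipped with a warning because
# bind paths should be absolute.
build_binds() {
  local binds=""
  local dir
  for dir in "$@"; do
    case "$dir" in
      /*) ;;  # absolute path, keep going
      *) echo "skipping relative path: $dir" >&2; continue ;;
    esac
    if [ -d "$dir" ]; then
      # Prepend a comma only when the list already has an entry.
      binds="${binds:+${binds},}${dir}:${dir}"
    fi
  done
  printf '%s\n' "$binds"
}

# Example (directory names are placeholders):
# export SINGULARITY_BIND="$(build_binds /scratch /apps /data)"
# export APPTAINER_BIND="${SINGULARITY_BIND}"
```

Directories that are missing are left out silently, so the same ~/.bashrc can be shared between machines with different storage layouts.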

¶ GPU - Test Run

Use the sample dataset for a test run. If you are on a GPU instance you should see a significant speedup.

We will run Singularity with the --nv flag, which makes the host's NVIDIA GPUs and driver libraries available inside the container.

export SINGULARITY_DIR="${HOME}/.singularity"

export SINGULARITYENV_USER=$(id -un)

#############################################

# Data Directories

#

# Add any additional directories you want in the container in the format `/dir:/dir`. If you have multiple extra binds, separate them with commas. Make sure to use absolute paths.

# Example :

# export SINGULARITY_BIND="/scratch:/scratch"

#############################################

export EXTRA_BINDS=""

export SINGULARITY_BIND="/scratch:/scratch,/apps:/apps"

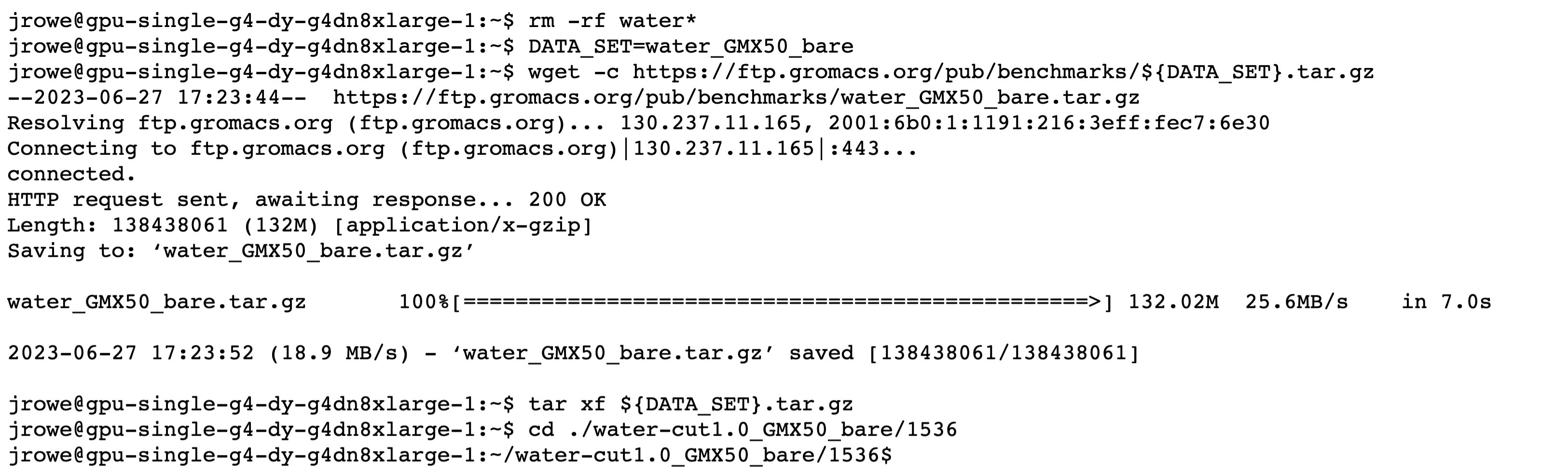

DATA_SET=water_GMX50_bare

wget -c https://ftp.gromacs.org/pub/benchmarks/${DATA_SET}.tar.gz

tar xf ${DATA_SET}.tar.gz

cd ./water-cut1.0_GMX50_bare/1536

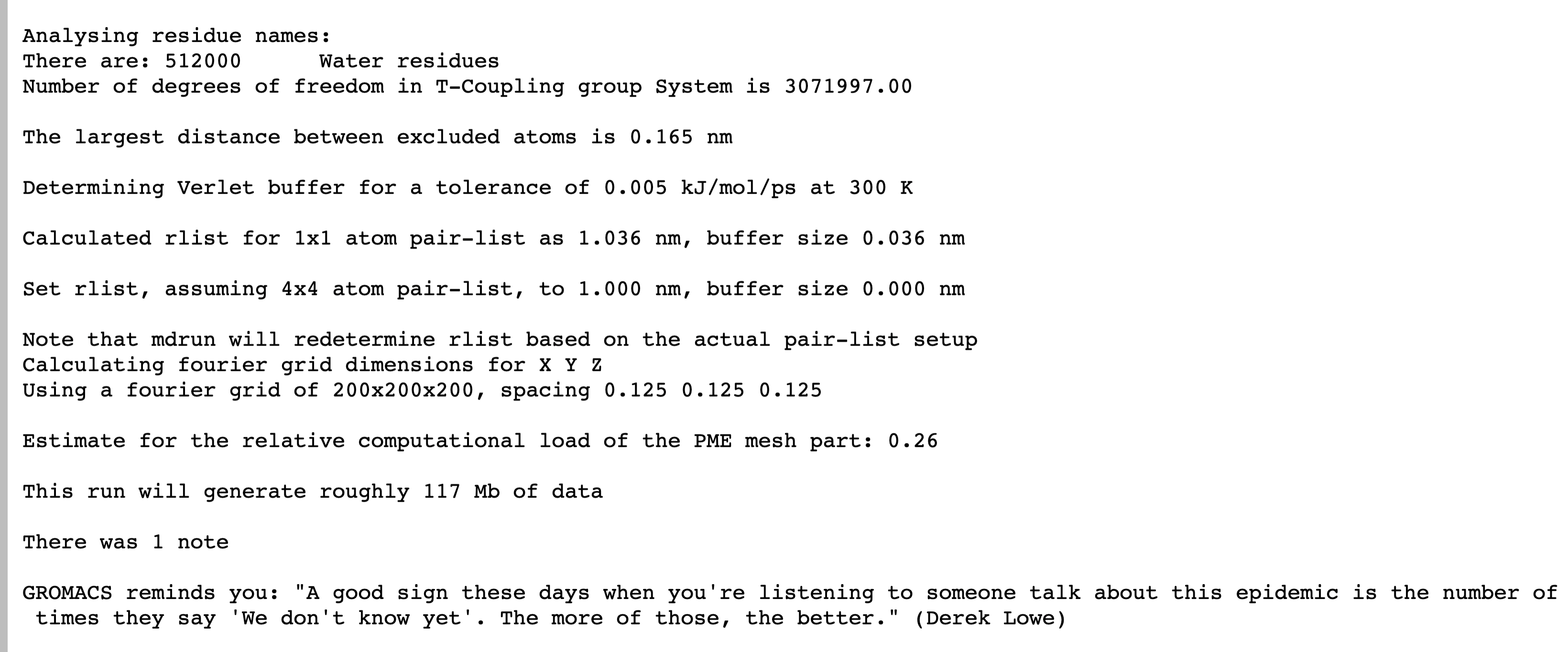

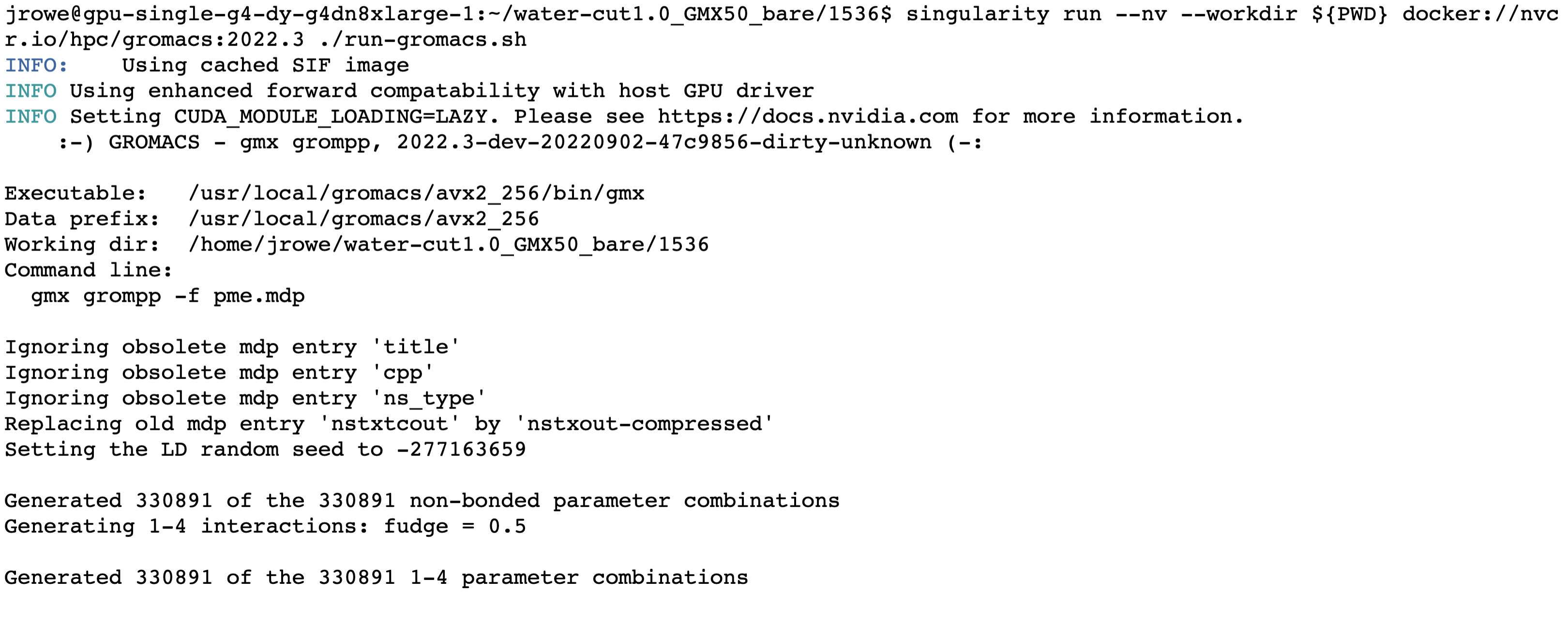

singularity run --nv --workdir ${PWD} docker://nvcr.io/hpc/gromacs:2022.3 gmx grompp -f pme.mdp

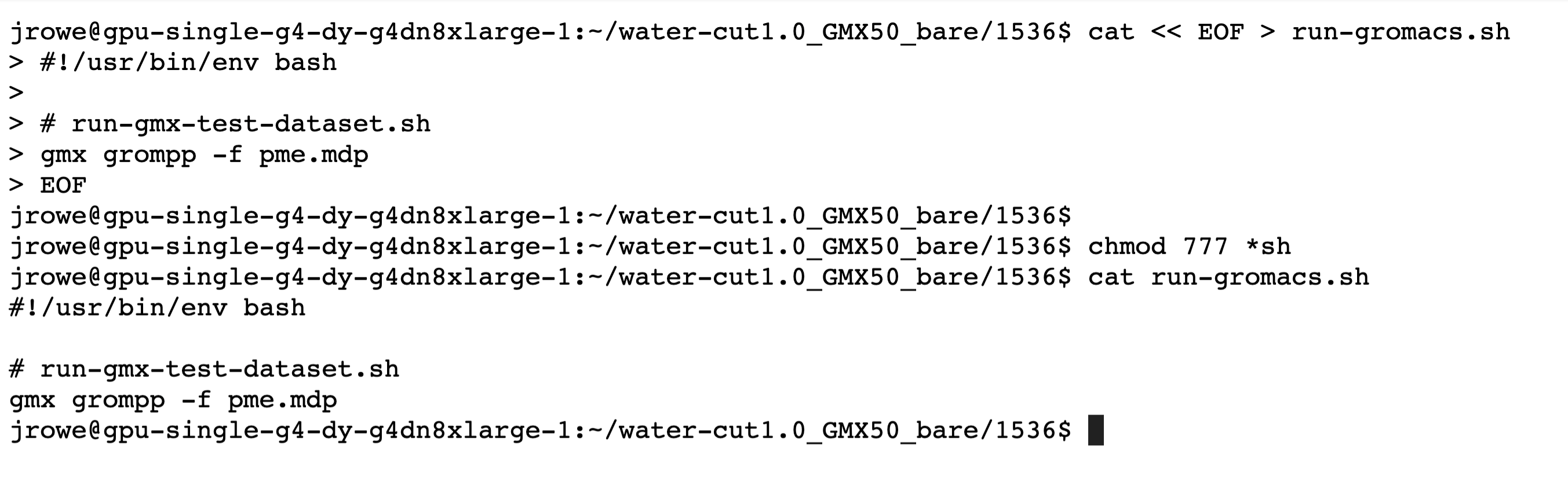

For practice, add the gmx grompp command to a shell script and run the shell script.

For this to work, every directory the script reads from or writes to must be listed in SINGULARITY_BIND.

It's also recommended that you use full paths to all datasets and shell scripts instead of relative paths.

cat << EOF > run-gromacs.sh

#!/usr/bin/env bash

# run-gromacs.sh

gmx grompp -f pme.mdp

EOF

chmod +x run-gromacs.sh

singularity run --nv --workdir ${PWD} docker://nvcr.io/hpc/gromacs:2022.3 ./run-gromacs.sh

¶ Shell Script

Here is a wrap-up shell script that combines everything we just covered.

#!/usr/bin/env bash

export SINGULARITY_DIR="${HOME}/.singularity"

export SINGULARITYENV_USER=$(id -un)

#############################################

# Data Directories

#

# Add any additional directories you want in the container in the format `/dir:/dir`. If you have multiple extra binds, separate them with commas. Make sure to use absolute paths.

# Example :

# export SINGULARITY_BIND="/scratch:/scratch"

#############################################

export EXTRA_BINDS=""

export SINGULARITY_BIND="/scratch:/scratch,/apps:/apps"

#############################################

# Specify your container

#

# Pull any containers. Here are a few to get you started.

# Example:

# export IMAGE="${HOME}/.singularity/r-tidyverse_4.2.2.sif"

#############################################

export IMAGE="${HOME}/.singularity/gromacs_2022.3.sif"

singularity run --nv --workdir ${PWD} "${IMAGE}" YOUR_COMMAND
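If you'd rather not edit the script for every command, the run line can be wrapped in a function. This is a sketch, not a fixed recipe: `select_image` and `run_in_gromacs` are hypothetical names, and the fallback to the registry URI assumes you are fine with Singularity fetching the image on demand when the local .sif is missing.

```shell
#!/usr/bin/env bash

# Print the image reference to run: the local .sif if it exists,
# otherwise the registry URI.
select_image() {
  local sif="$1"
  local uri="$2"
  if [ -f "$sif" ]; then
    printf '%s\n' "$sif"
  else
    printf '%s\n' "$uri"
  fi
}

# Hypothetical wrapper: run any command inside the GROMACS container.
# Uses $IMAGE when it is set, otherwise the default pull location.
run_in_gromacs() {
  local image
  image="$(select_image \
    "${IMAGE:-${HOME}/.singularity/gromacs_2022.3.sif}" \
    "docker://nvcr.io/hpc/gromacs:2022.3")"
  singularity run --nv --workdir "${PWD}" "$image" "$@"
}

# Example:
# run_in_gromacs gmx grompp -f pme.mdp
```

Running from the local .sif avoids contacting the registry on every invocation.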

¶ Troubleshooting

¶ Command not found

Make sure your command or shell script is executable (chmod +x *sh).
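A quick pre-flight check can catch the usual causes before you launch the container. The sketch below uses a hypothetical helper, `check_script`, and assumes the three things worth checking are that the file exists, that it is executable, and that its first line is a shebang.

```shell
#!/usr/bin/env bash

# Report why a script might fail to start inside the container.
# Returns 0 only when the script exists, is executable, and begins
# with a shebang line.
check_script() {
  local script="$1"
  local first_line=""
  if [ ! -f "$script" ]; then
    echo "missing: $script"
    return 1
  fi
  if [ ! -x "$script" ]; then
    echo "not executable: $script"
    return 1
  fi
  # Read only the first line; tolerate a file without a trailing newline.
  IFS= read -r first_line < "$script" || true
  case "$first_line" in
    '#!'*) echo "ok: $script" ;;
    *) echo "no shebang on first line: $script"; return 1 ;;
  esac
}

# Example:
# check_script "${PWD}/run-gromacs.sh"
```

Note that a blank line above `#!/usr/bin/env bash` counts as a missing shebang, which is easy to introduce when writing a script with a heredoc.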

¶ File not found

Make sure you have the correct volumes available to the container.

Update this variable to match your system:

export SINGULARITY_BIND="/scratch:/scratch,/apps:/apps"

For example, maybe you have /data or /shared instead of /scratch, or you want to add additional tmp storage by binding /tmp.
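To see whether a given file is covered by your binds, you can compare its path against the bind list. This is a rough sketch: `check_bound` is a hypothetical helper and it only looks at the explicit entries in the bind string. Singularity usually mounts a few locations by default as well (such as your home directory and the current working directory, depending on site configuration), and those are not considered here.

```shell
#!/usr/bin/env bash

# Check whether an absolute host path lies under the source side of any
# entry in a bind string such as "/scratch:/scratch,/apps:/apps".
# The bind string defaults to $SINGULARITY_BIND when no second argument
# is given.
check_bound() {
  local path="$1"
  local binds="${2-${SINGULARITY_BIND-}}"
  local entry src
  local IFS=','                 # split the bind list on commas
  for entry in $binds; do
    [ -n "$entry" ] || continue # ignore empty entries
    src="${entry%%:*}"          # host side of src[:dest[:opts]]
    src="${src%/}"              # drop a trailing slash
    case "$path" in
      "$src"|"$src"/*)
        echo "bound via $entry"
        return 0
        ;;
    esac
  done
  echo "not bound: $path"
  return 1
}

# Example:
# check_bound /scratch/water-cut1.0_GMX50_bare/1536/pme.mdp
```

Matching on `"$src"/*` instead of a plain prefix keeps /scratchy from being mistaken for a path under /scratch.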

¶ Best Practices

- Use complete file paths instead of relative paths.

- Make all sh, py, etc. scripts executable with chmod +x.

- For more complex pipelines, consider using Nextflow to wrap your Singularity commands.

¶ Resources

Singularity + GPU [https://docs.sylabs.io/guides/3.5/user-guide/gpu.html#gpu-support-nvidia-cuda-amd-rocm]

Nextflow + Singularity [https://www.nextflow.io/docs/latest/singularity.html]

GPU Enabled Containers [https://catalog.ngc.nvidia.com/containers]

¶ Gromacs Tags

View all gromacs tags here.